A Blueprint for Enterprise Generative AI Strategy

Integrating Generative AI into enterprise workflows is no longer a luxury, it's a necessity. Our latest article delves into the critical components of a robust Generative AI strategy, offering insights into how businesses can harness these technologies for enhanced efficiency and innovation.

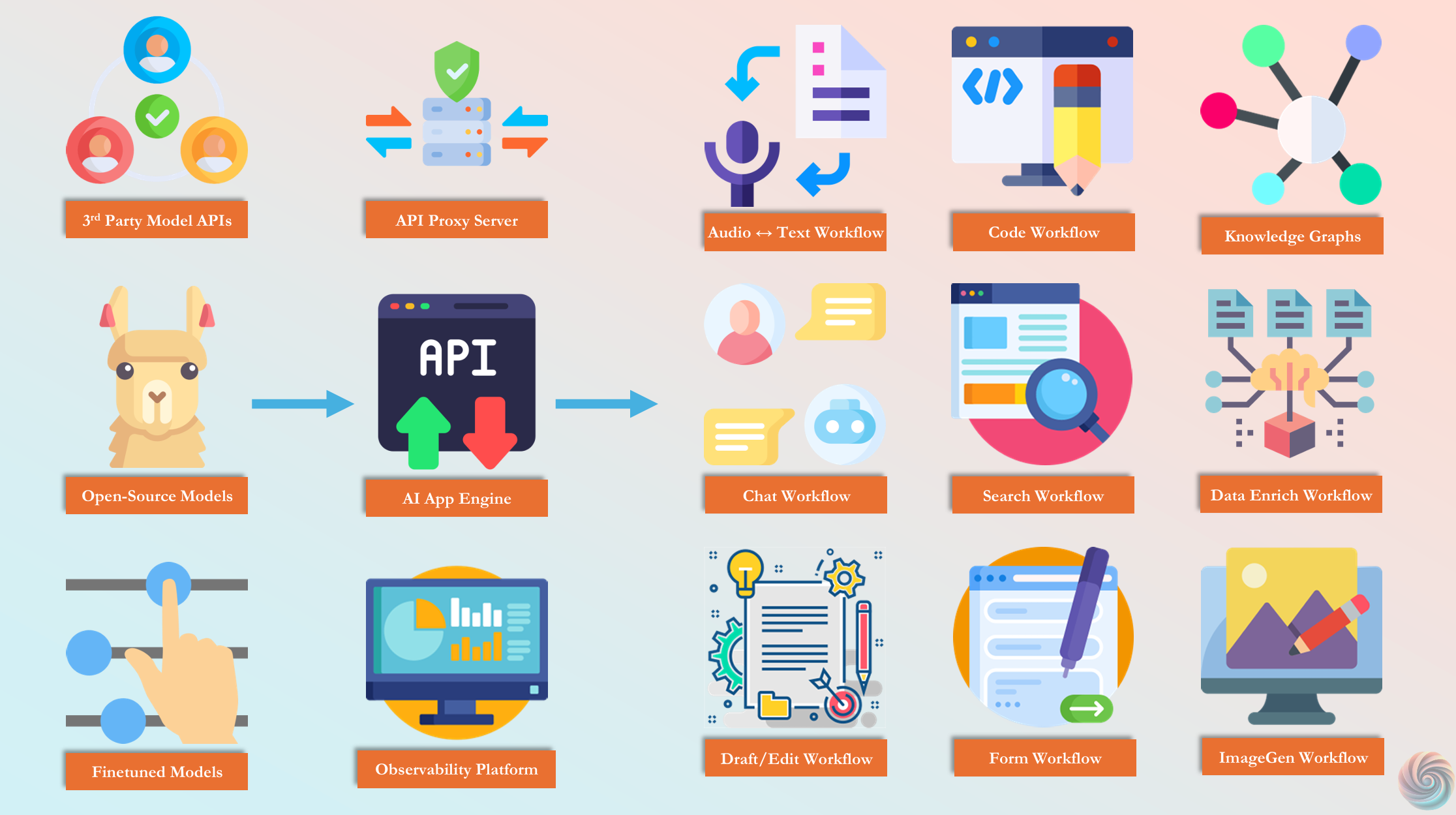

Building a Resilient and Agile Ecosystem

At DialectAI, we have seen firsthand the challenges and opportunities presented by emerging Generative AI projects. Across diverse engagements, clients consistently face hurdles integrating multiple libraries, managing disparate systems, and ensuring smooth transitions between various LLM providers. These experiences reveal that establishing a robust Generative AI strategy is less about isolated technical components and more about constructing an integrated ecosystem that bridges technology, process, and security.

This article is a compendium of various strategic components, current generation technologies, and best practices essential for architecting a resilient, agile, and secure Generative AI ecosystem. By weaving together insights from cloud services, AI proxy architectures, vector databases, observability frameworks, and robust security protocols, it provides a holistic guide for enterprises navigating the complex landscape of generative AI. Through in-depth analysis and real-world examples, we will discover how to seamlessly integrate these components into your enterprise technology stack.

The Role of AI Proxy and API Routing

Integrating multiple LLM API providers means juggling different libraries (openai, claude-sdk, Azure OpenAI, AWS Bedrock, Google Vertex), each with its own proprietary interface, streaming support quirks, migrations and version conflicts (we are pointing especially at Langchain and Azure OpenAI).

There are many excellent proxy server options to choose from, for example, OpenRouter, LLMRouter, RouteLLM, and Portkey. Without a centralized AI proxy or LLM router, each system would need its own integration logic, complicating development and hindering flexibility. The lack of such a layer can lead to vendor lock-in and high switching costs if a provider needs to be changed down the road.

Key Benefits:

- Simplified Integration: A unified interface abstracts the differences between API providers, reducing custom integration code.

- Flexibility & Agility: Makes it easier to swap or upgrade services without major system rewrites.

- Enhanced Security: Centralizes authentication, authorization, and RBAC to protect each API endpoint.

Leveraging Cloud Generative AI Providers

Managed generative AI services, such as those offered by Azure OpenAI, AWS Bedrock, Cloudflare, and Google Vertex AI, deliver not only cutting-edge multimodal AI capabilities but also the reliability, compliance, and scalability that modern enterprises demand. These platforms integrate seamlessly with your existing cloud infrastructure, ensuring smooth transitions from development through testing to production. Without access to these managed services, enterprises may face significant scalability challenges and operational downtimes that could disrupt critical business functions.

Additionally, as GPU costs continue to rise and usage patterns remain intermittent, making a case for self-hosting large language models becomes difficult except for those rare, high-volume, round-the-clock scenarios. In this context, cloud-based generative AI services are not just an option; they are essential.

Key Benefits:

- Scalability: Rapidly scale AI workloads globally with robust infrastructure.

- Integrated Ecosystems: Seamless connection to broader cloud services and compliance frameworks.

- Operational Efficiency: Managed services reduce maintenance overhead, allowing teams to focus on innovation.

Custom and Self-Hosted LLM API Servers

For organizations with unique or data-sensitive requirements, custom or self-hosted solutions using the Hugging Face ecosystem (Transformers, bitsandbytes, Accelerate, TRL, PEFT) and deployment optimizations via TensorRT, SGLang, vLLM, Triton Inference Server, and others offer the required flexibility and control. Without these tailored deployments, companies may be forced into one-size-fits-all solutions that don’t meet their specialized needs, potentially resulting in suboptimal performance or higher costs.

Key Benefits:

- Customization: Tailor AI models to address industry-specific challenges and nuances.

- Cost Management: Potential for reduced long-term costs by avoiding recurring managed service fees.

- Performance Tuning: Optimize models to achieve better inference performance and resource utilization.

Observability: Beyond Technical Monitoring

An effective observability stack is crucial not only for tracking system health via tools like LangSmith, Lunary, Datadog, Helicone, and Traceloop but also for collecting process data on LLM usage across enterprise applications. This information is invaluable for identifying operational inefficiencies, uncovering skill gaps, and pinpointing process shortcomings that hinder strategic growth. Without comprehensive observability, organizations risk operating blind, with system anomalies or underperforming components going undetected until they cause significant disruptions.

Key Benefits:

- Actionable Insights: Enables teams to identify bottlenecks and areas for process improvement.

- Proactive Maintenance: Early detection of anomalies prevents downtime and preserves system integrity.

- Strategic Planning: Data-driven visibility across usage patterns can reveal untapped opportunities and hidden inefficiencies.

Robust Guardrails and Ethical AI

The implementation of robust safety and moderation tools, such as LLMGuard, Guardrails.ai, NeMo Guardrails, OpenAI’s Moderation, and LlamaGuard, is essential to enforce ethical AI practices and protect organizational reputation. Without these guardrails, the risk of deploying biased or harmful outputs increases, which can have significant legal, reputational, and operational repercussions.

For example, there are several stories of successful jailbreaking attempts documented on X/Twitter, especially by @Pliny the Liberator revealing the brittleness in Generative AI workflows.

These models or API services are very economical to deploy and performant while identifying toxic instances (the OpenAI moderation API is in fact free to use), many Kagglers might remember working on Jigsaw's toxicity competitions on Kaggle circa 2018 solving similar problems in various competitions.

Key Benefits:

- Risk Management: Minimizes exposure to ethically and legally problematic outputs.

- Trust Building: Cultivates stakeholder confidence by ensuring responsible AI behavior.

- Compliance Assurance: Helps meet regulatory and industry-specific guidelines.

Enabling Agentic Behavior for Operational Agility

Agentic features enable Generative AI systems to take autonomous actions such as web searches, file system access, API requests, database queries, storage buckets or version control systems. Tools including Goose & Cline empower AI to not only generate content but also interact with external systems, thereby automating routine tasks. Without these functionalities, enterprises may not fully leverage automation potential, leaving significant efficiency gains on the table.

Key Benefits:

- Operational Efficiency: Automates complex workflows and routine data collection.

- Enhanced Integration: Bridges the gap between AI and existing enterprise tools.

- Innovation Acceleration: Frees up human resources to focus on strategic work rather than repetitive tasks.

The Backbone: Vector Databases

Modern AI applications frequently depend on vector databases such as Milvus, Pinecone, Weaviate, Qdrant and many others, to perform rapid and efficient similarity searches, gather relevant context, and manage high-dimensional data. These databases leverage embedding models to index diverse contextual information from multiple sources, ensuring that critical data is readily available when needed in AI workflows. Without this component, data retrieval can become sluggish and the precision of AI-driven insights may diminish, ultimately hampering user experience and decision-making.

Imagine trying to architect a customer service chatbot that lacks the ability to swiftly access and sift through standard operating procedures, detailed product documentation, and customer history, all of which are essential for delivering accurate, context-aware responses. Without a robust vector database, even the most advanced AI model could struggle to provide the timely and relevant assistance your customers expect.

Key Benefits:

- High-Speed Retrieval: Enables real-time access to complex, multidimensional data.

- Improved Accuracy: Enhances recommendation systems and semantic search applications.

- Scalability: Efficiently manages large volumes of data as demands grow.

Empowering Teams with No Code & Low Code AI Workflow Platforms

Platforms such as CrewAI, Gumloop, Langflow and Azure Promptflow lower the barrier to AI adoption by enabling non-technical teams to participate in building and deploying Generative AI workflows. While these tools accelerate innovation, without standardization, they risk creating fragmented processes and potential security loopholes. Many of these platforms make it easy to deploy these workflows as API services with built-in security making deployment, integration and maintenance a breeze.

Key Benefits:

- Rapid Prototyping: Accelerates development cycles and fosters experimental innovation.

- Democratized Access: Allows cross-functional teams to contribute without deep technical expertise.

- Streamlined Workflows: Reduces reliance on specialized developers for every step of AI deployment.

Additional Enhancements: Broadening Capabilities

Enterprises looking to extend their Generative AI capabilities can integrate additional enhancements such as centralized knowledge graphs, knowledge databases, multimodal functionalities, specialized coding models and vector stores on internal production codebases, speech transcription and generation, and image generation and query capabilities.

While each component brings its unique benefits, their improper integration can add complexity rather than value. A thoughtful strategy ensures that these enhancements complement the core framework without compromising system integrity.

Key Benefits:

- Enhanced Versatility: Supports diverse data types and creative applications.

- Innovation Edge: Opens avenues for advanced research and new product lines.

- Future-Proofing: Keeps the ecosystem adaptable to emerging trends and technologies.

The Security Imperative:

Authentication, Authorization, and RBAC

Across the entire technology stack, robust authentication, authorization, and Role-Based Access Control are non-negotiable. They form the security fabric that binds every component, ensuring that from API proxies and cloud providers to custom models and observability tools, every access point is secure. Neglecting these measures exposes the enterprise to significant risks, including data breaches and unauthorized manipulations.

Example scenario from not so long ago:

An HR specialist uploaded a confidential salary spreadsheet to a SharePoint folder with default permissions. Copilot indexed it under the tenant-level index. Later, a junior employee asked Copilot: "What's the salary range for senior engineers?"

Copilot responded with exact figures from the spreadsheet, despite the junior employee lacking direct folder access. Read more about this story below.

Key Benefits:

- Enhanced Security: Protects critical data and systems from unauthorized access.

- Operational Integrity: Minimizes the risk of disruption due to security incidents.

- Regulatory Compliance: Helps meet stringent industry and regional security standards.

Be it scaling finetuned LLM inference or integrating AI into complicated workflows and processes, with DialectAI as your trusted partner, you can efficiently transform your Generative AI strategy into a secure, scalable, and economically competitive backbone for your enterprise workflows.

Ready to unlock the true potential of your AI ecosystem? Contact DialectAI today for a consultation and discover how our custom Generative AI solutions and services can transform your business processes: from manual, fragmented, error-prone tasks to streamlined, integrated and automated workflows.

Feel free to share this article with your colleagues or reach out in the comments below if you have any questions or would like to explore specific Generative AI solutions.