Enterprise Conversational AI Assistant

Customizable conversational AI assistants are becoming essential for enterprises, boosting productivity while keeping sensitive data secure. With features like SSO integration, RBAC, internal knowledge access, and model flexibility, they streamline work without data security concerns.

Enhancing Enterprise Productivity with Customizable Conversational AI Assistants

In today’s fast-paced business environment, staying ahead means not only optimizing workflows but also ensuring that sensitive communications remain secure and internal. Modern conversational assistants, built on advanced large language models (LLMs), offer a suite of features that can transform how employees and teams collaborate. These systems support dynamic model selection, context-aware code generation, seamless integration with single sign-on (SSO) systems, real-time web search, and even the capacity to execute tool-like tasks during conversations, all of which can multiply productivity while safeguarding corporate data.

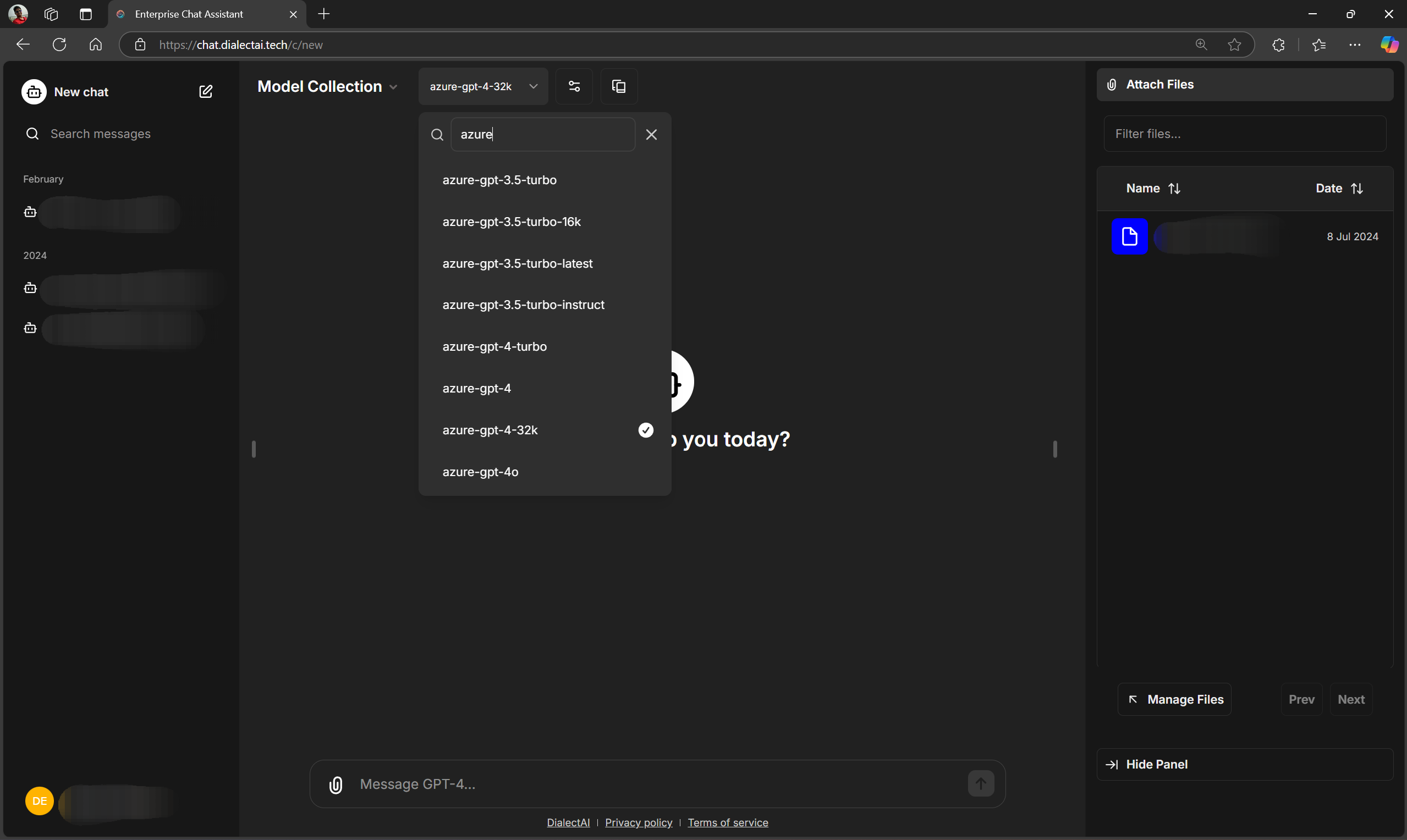

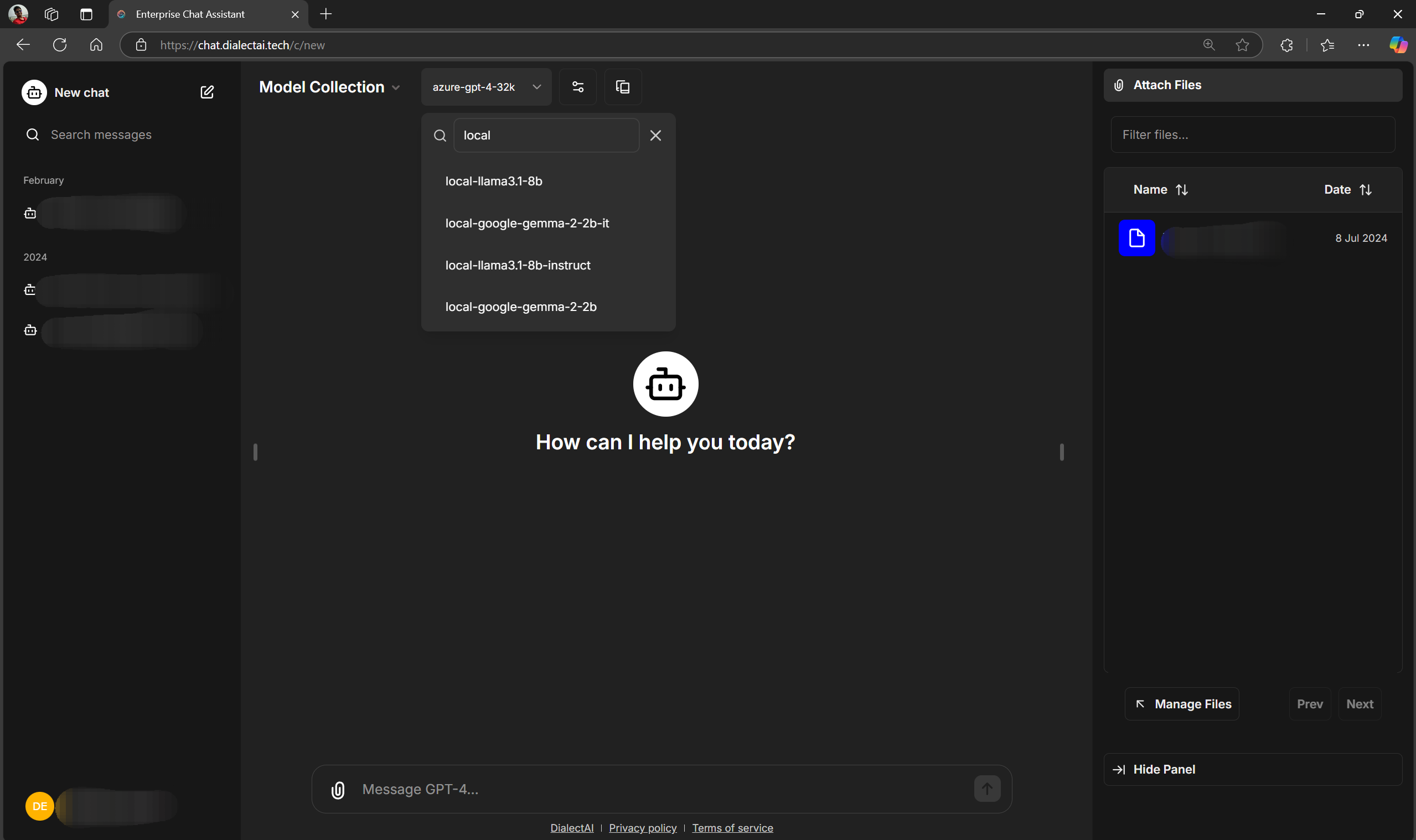

A Flexible Toolbox for Every Need

One of the most striking benefits of modern internal chat systems is the flexibility to switch between different processing engines or “brains” on the fly. For enterprises, this means that if one model fits a particular domain better (such as creative brainstorming, technical documentation, or strategic planning), the assistant can be quickly reconfigured to use it. Additionally, integrated features that automatically generate code or scripts from natural language prompts let technical teams automate routine tasks without leaving their chat environment.
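As a rough illustration of switching "brains" by domain, the sketch below maps a task domain to a model profile and falls back to a default. The model names, temperatures, and the `select_model` helper are hypothetical placeholders, not any particular product's configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str           # hypothetical model identifier
    temperature: float  # sampling temperature handed to the backend


# Hypothetical registry: task domain -> model profile.
MODEL_REGISTRY = {
    "brainstorming": ModelProfile("creative-large", 0.9),
    "documentation": ModelProfile("precise-medium", 0.2),
    "planning": ModelProfile("reasoning-large", 0.4),
}

# Used when the domain is unknown or not registered.
DEFAULT_PROFILE = ModelProfile("general-small", 0.5)


def select_model(domain: str) -> ModelProfile:
    """Return the profile registered for a domain, or the default profile."""
    return MODEL_REGISTRY.get(domain.strip().lower(), DEFAULT_PROFILE)


if __name__ == "__main__":
    for domain in ("Documentation", "legal review"):
        profile = select_model(domain)
        print(domain, "->", profile.name, profile.temperature)
```

Keeping the mapping in one registry means a change of engine for a given domain is a configuration edit rather than a code change.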

This modularity also extends to how the systems operate. By embedding features like context-aware knowledge retrieval, employees can navigate disparate documents and data repositories more quickly than ever before. The conversational assistant can link different pieces of internal knowledge, breaking down silos and facilitating creative breakthroughs that emerge from blending diverse inputs.

Security and Data Sovereignty at the Forefront

Enterprises are particularly wary of data being inadvertently exposed to external systems, a concern underscored by several documented cases where sensitive information ended up on public AI platforms. In some instances, employees have unintentionally submitted proprietary code, business strategies, or internal communications into public-facing applications, leading to calls for rigorous safeguards and deletion requests. There have even been reports of users manipulating prompts to retrieve system secrets or sensitive keys from LLMs. These examples serve as a stark reminder of why data must be managed within the enterprise perimeter.

Melania Watson wrote about this in her article titled "Enterprises face rising risks from generative AI data leaks" on SecurityBrief last month.

Borrowing an important excerpt from her article:

James Robinson, the Chief Information Security Officer of Netskope, commented on the findings, stating: "Despite earnest efforts by organisations to implement company-managed genAI tools, our research shows that shadow IT has turned into shadow AI, with nearly three-quarters of users still accessing genAI apps through personal accounts. This ongoing trend, when combined with the data in which it is being shared, underscores the need for advanced data security capabilities so that security and risk management teams can regain governance, visibility, and acceptable use over genAI usage within their organisations."

By hosting an internal version of these assistants, whether through centrally managed on-premises deployments or controlled cloud environments, companies gain full oversight of data flow. This approach ensures that conversational data remains internal and is never repurposed for training external models, a reassurance that many cloud LLM and AI technology providers now explicitly offer. During the early days of high-profile public partnerships between tech giants and AI innovators, enterprises watched closely as industry leaders had to publicly clarify that customer data would remain isolated from model training processes. This commitment to data sovereignty not only mitigates the risk of confidential leaks but also builds trust among teams that rely on these systems for everyday operations.

Tailored Knowledge Management through Customization

Another powerful aspect of an enterprise-grade conversational assistant is its ability to evolve with the organization. Over time, as managers, team leaders, and employees engage with the assistant, it can be refined through lightweight fine-tuning or tailored adapter models to better reflect internal jargon, processes, and best practices. This customization can be further enhanced by pairing the assistant with a vector store or a knowledge graph that captures the full spectrum of an organization’s public and internal data.
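To make the vector store idea concrete, here is a minimal, self-contained sketch of similarity search over internal documents. It assumes a toy bag-of-words "embedding" and an in-memory list; a real deployment would use a learned embedding model and a dedicated vector database. The `VectorStore` class and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words term-count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    """In-memory store that ranks documents by similarity to a query."""

    def __init__(self) -> None:
        self._items = []  # list of (doc_id, vector) pairs

    def add(self, doc_id: str, text: str) -> None:
        self._items.append((doc_id, embed(text)))

    def search(self, query: str, k: int = 2) -> list:
        q = embed(query)
        scored = [(cosine(q, vec), doc_id) for doc_id, vec in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        # Drop documents that share no terms with the query.
        return [doc_id for score, doc_id in scored[:k] if score > 0]


if __name__ == "__main__":
    store = VectorStore()
    store.add("vpn", "How to request VPN access for remote work")
    store.add("expenses", "Expense report submission policy and deadlines")
    store.add("onboarding", "New hire onboarding checklist for laptops and accounts")
    print(store.search("how do I submit an expense report", k=1))
```

The retrieved document IDs would then be used to pull the matching passages into the assistant's prompt as context.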

McKinsey & Company's blog post about Lilli, their internal chatbot integrated with their knowledge base, is a good example of the gains possible when this is done correctly.

In practical terms, this means that not only does the assistant help answer employee queries in real time, but it also becomes a repository of institutional memory. As turnover occurs and teams change, the curated interactions continue to serve as a living archive that supports onboarding and ongoing productivity across language and cultural barriers.

Seamless Integration with Enterprise Ecosystems

Beyond the basic functionality, integrating conversational AI platforms into an enterprise setting brings about myriad secondary benefits. The combination of flexible integration modules such as

- Secure SSO,

- role-based access control (RBAC),

- internal web search (over SharePoint, Notion, Confluence, GitHub), and

- standardized API protocols and tool use (through MCP clients and servers)

means that the conversational assistant can dovetail with existing enterprise software. This lowers the barrier for adoption, as employees can interact in their native languages and with familiar interfaces, reducing miscommunication and speeding up problem-solving.
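The way SSO identity and role-based access combine with internal search can be sketched as a filter applied before any retrieved document reaches the model. This is an illustrative outline only: the `User`, `Document`, and `filter_results` names are hypothetical, and the roles are assumed to arrive as claims from the SSO provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    source: str               # e.g. "Confluence" or "GitHub"
    allowed_roles: frozenset  # roles permitted to read this document


@dataclass(frozen=True)
class User:
    username: str
    roles: frozenset  # assumed to be taken from SSO token claims


def can_read(user: User, doc: Document) -> bool:
    """A user may read a document if they hold at least one allowed role."""
    return bool(user.roles & doc.allowed_roles)


def filter_results(user: User, docs: list) -> list:
    """Drop search hits the user is not entitled to see."""
    return [doc for doc in docs if can_read(user, doc)]


if __name__ == "__main__":
    hits = [
        Document("roadmap", "Confluence", frozenset({"product", "exec"})),
        Document("payroll", "SharePoint", frozenset({"hr"})),
        Document("api-guide", "GitHub", frozenset({"engineering", "product"})),
    ]
    engineer = User("asha", frozenset({"engineering"}))
    print([doc.doc_id for doc in filter_results(engineer, hits)])
```

Filtering at retrieval time, rather than relying on the model to withhold content, keeps restricted documents out of the prompt entirely.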

The platform’s openness (following broadly accepted industry specifications) ensures that if priorities change, enterprises can switch providers without a complete overhaul of their internal systems. This interoperability, alongside robust cost-effectiveness relative to public alternatives, makes the case for an internal solution extremely compelling.

The Business Case for Internal Conversational Assistants

For forward-thinking organizations, the value proposition of deploying a secure, internally managed conversational assistant is clear. By keeping employee communications on-premises or within a tightly controlled cloud environment, companies not only enhance productivity but also safeguard sensitive data, an increasingly important consideration in light of several high-profile incidents in recent years. News reports have highlighted instances where enterprise data reached public platforms, and even situations where attempts were made to compromise system security through clever prompt manipulation. These instances underscore the risk inherent in relying solely on public-facing solutions.

Moreover, reining in these risks is not just about data security; it’s about preserving intellectual property and maintaining competitive advantage. Secure, internally managed systems help ensure that the innovations and insights generated during everyday conversations stay within the organization, fostering a culture of open, yet safe, collaboration.

Investing in an enterprise-grade conversational assistant built on robust LLM technology promises to unlock productivity gains across diverse business functions. By offering flexible configuration options, secure data management, and the potential for continuous improvement via internal fine-tuning, such systems deliver on the promise of enhanced internal communication and knowledge management. In an era where digital transformation is a competitive necessity, the case for internal AI assistants grows ever stronger: they offer a secure and adaptable solution that not only drives efficiency but also protects the enterprise’s most sensitive assets.

Ready to unlock the untapped potential of your internal knowledge bases within your business? Contact DialectAI today for a demo on how we can help set up a custom internal conversational assistant for your needs in a cost-effective manner.

Feel free to share this article with your colleagues or reach out in the comments below if you have any questions or would like to explore specific Generative AI solutions.